Orientation network

Overview

The aim of the project is to predict an object-aligned bounding box from an axis-aligned bounding box. Axis-aligned bounding boxes are a common output of object detection methods. Because animal poses vary widely between images, especially underwater, an axis-aligned box is not sufficient for further analysis of the detected animal, e.g. identification or matching of a specific individual. Detecting the orientation and the object-aligned box makes it possible to normalize the animals' poses to a common view by cropping and rotating the images. The project trains a convolutional neural network to predict oriented rectangles that indicate the direction of the animal.
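To make the idea of pose normalization concrete, here is a minimal sketch of the geometry involved. It is not the project's implementation. It assumes the oriented box is described by a centre `(cx, cy)`, a width `w` along the animal's direction, a height `h`, and an angle `theta` in radians; this parameterization, the function names, and the rotation convention are illustrative assumptions only.

```python
import math


def oriented_box_corners(cx, cy, w, h, theta):
    """Corners of a rectangle centred at (cx, cy), rotated by theta radians.

    Assumed parameterization (hypothetical, not taken from the project):
    w runs along the object's direction, h across it.
    """
    c, s = math.cos(theta), math.sin(theta)
    # Corner offsets in the box's own (unrotated) frame.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each offset by theta and translate to the box centre.
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]


def to_normalized_view(x, y, cx, cy, w, h, theta):
    """Map an image point into the frame of the upright, cropped box.

    Undoes the rotation about the box centre, then shifts the origin
    to the crop's first corner so coordinates fall in [0, w] x [0, h].
    """
    c, s = math.cos(theta), math.sin(theta)
    dx, dy = x - cx, y - cy
    u = dx * c + dy * s    # rotate by -theta
    v = -dx * s + dy * c
    return u + w / 2, v + h / 2


if __name__ == "__main__":
    box = (100.0, 50.0, 40.0, 20.0, math.pi / 6)
    for corner in oriented_box_corners(*box):
        print(corner, "->", to_normalized_view(*corner, *box))
```

Mapping the four corners through `to_normalized_view` gives the corners of an upright `w` by `h` rectangle, which is the "common view" the crop-and-rotate step aims for. In practice the same transform would be applied to pixels with an image library's affine warp rather than point by point.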

The method is generic and not species-specific. The same model architecture and training schedule are applied to seven species, including turtles, manta rays, whale sharks, right whales and seahorses.

The source code, implementation details and results are available on GitHub.

Deliverables

- The project was completed for WildMe/WildBook using their data.

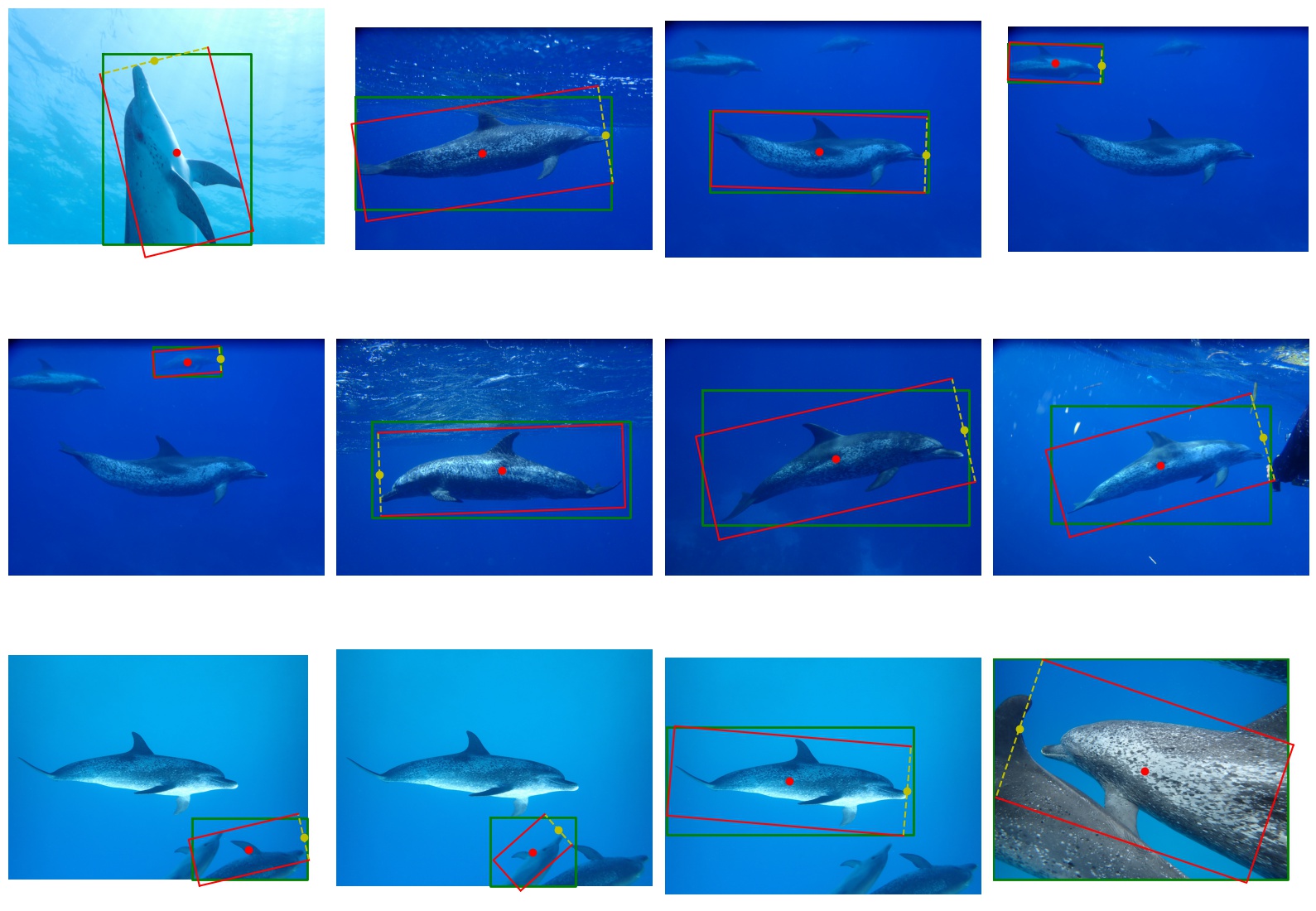

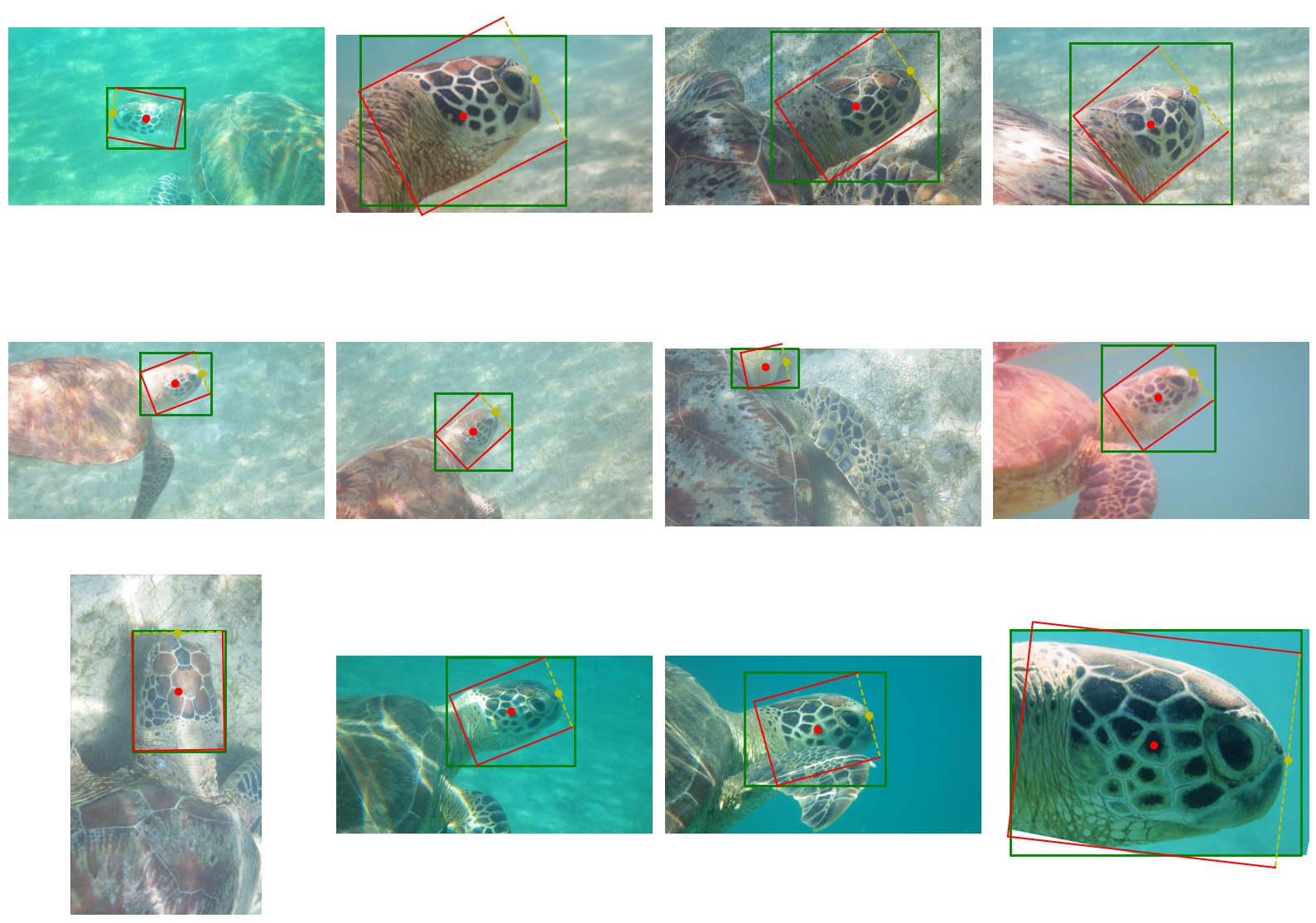

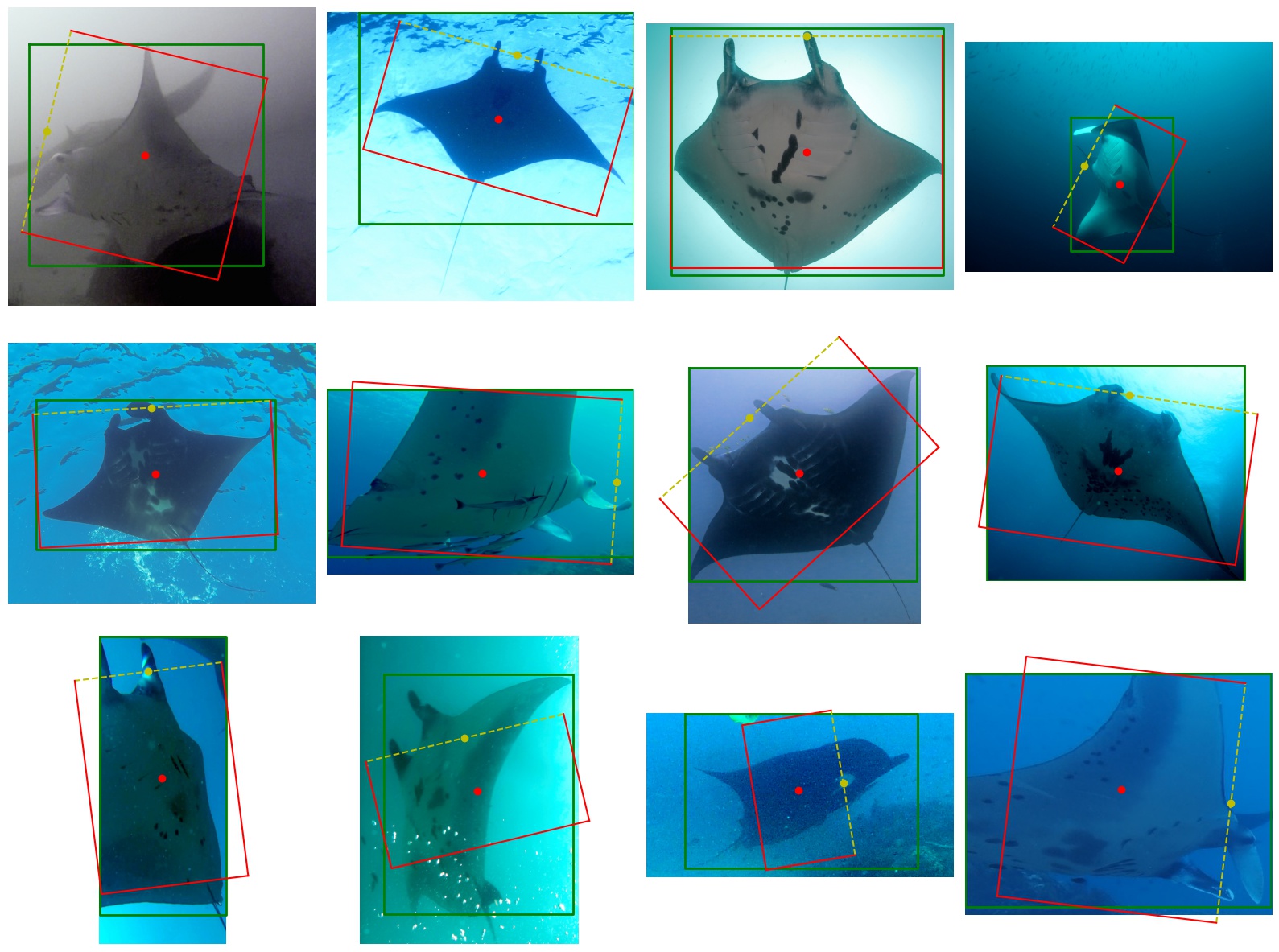

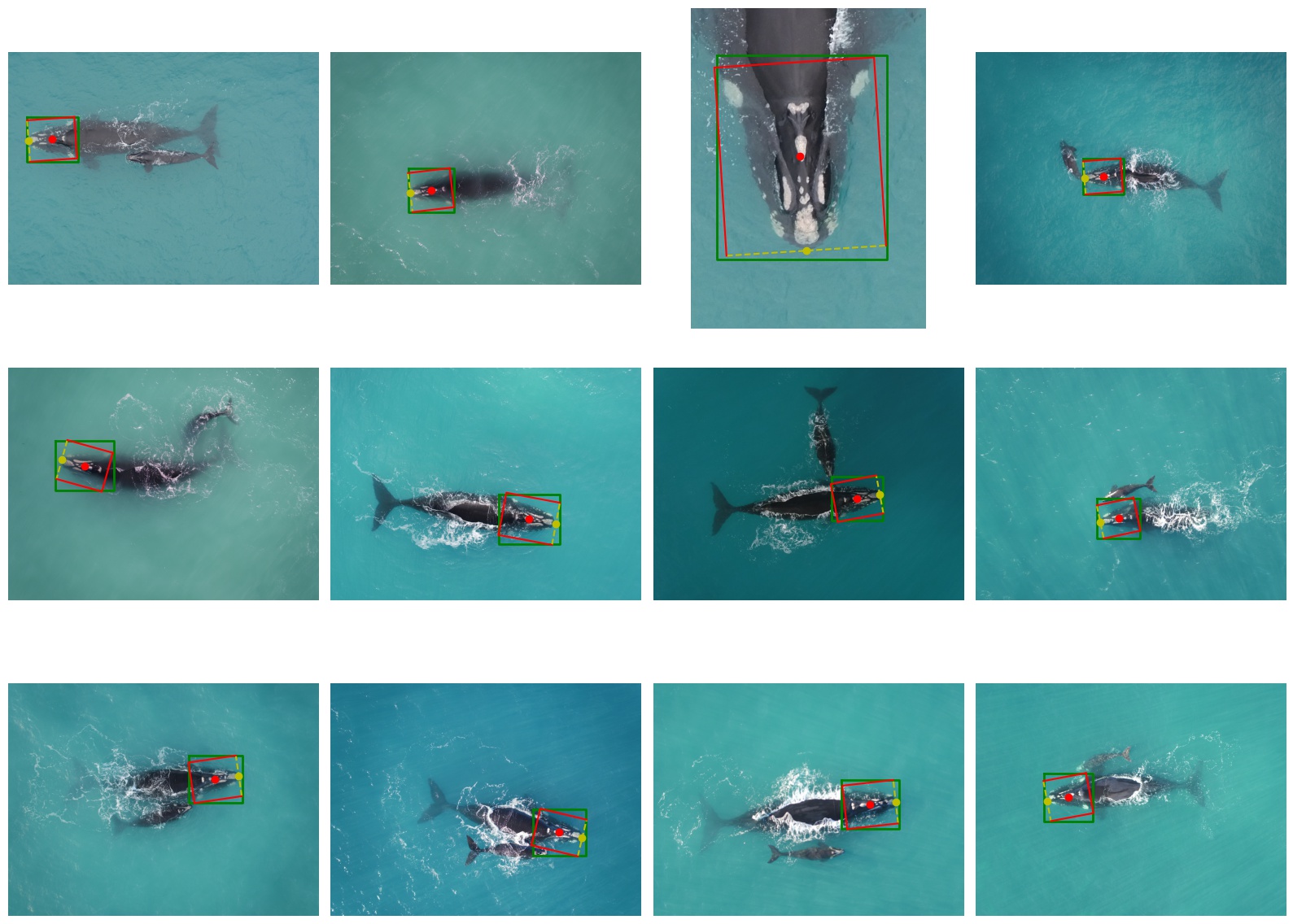

Examples of detected orientation

A green box - axis-aligned detection rectangle provided as input.

A red box with a yellow dashed side - detected oriented object-aligned rectangle.

Spotted dolphins:

Sea turtle heads:

Manta rays:

Right whales:

Image credit: WildMe/WildBook